Keras, on the other hand, is a high-level neural networks API written in Python that allows for easy and fast prototyping of deep learning models. One of the key advantages of Horovod is its ability to scale linearly with the number of GPUs, which means that the training time can be significantly reduced as more GPUs are added to the system. Horovod supports popular deep learning frameworks such as TensorFlow, PyTorch, and Apache MXNet, making it a versatile choice for a wide range of applications. It is built on top of the Message Passing Interface (MPI) and leverages advanced optimization techniques to achieve high-performance communication between GPUs.

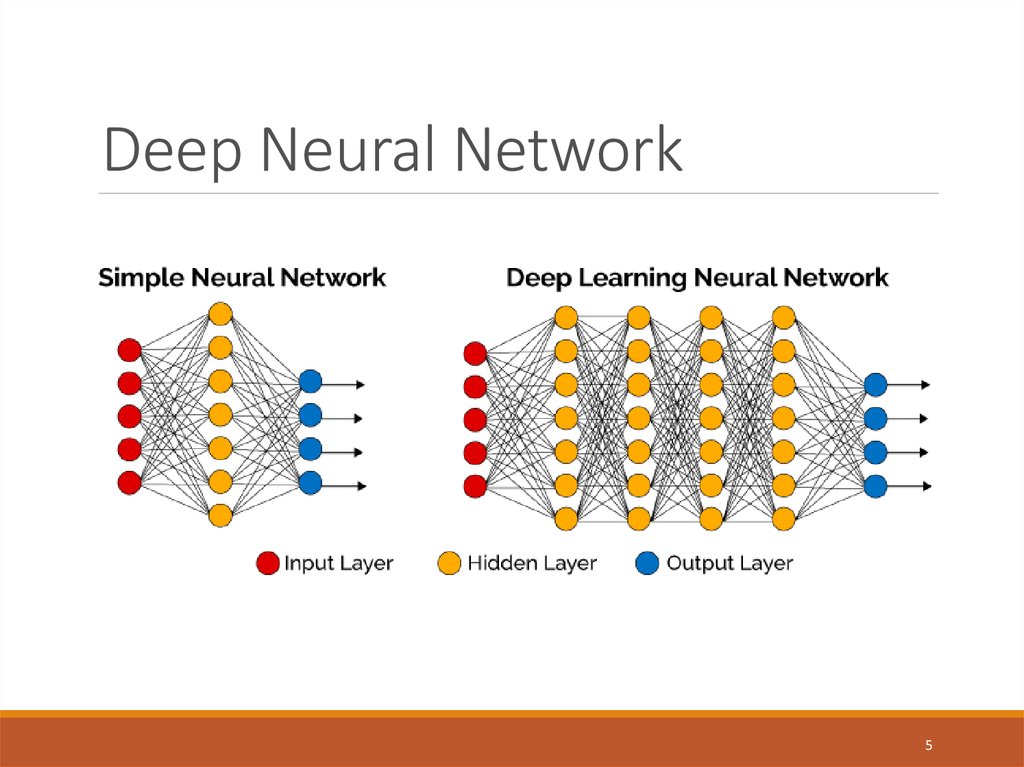

Horovod, developed by Uber, is designed to make distributed deep learning fast and easy to use. In combination with Keras, a popular high-level neural networks API, Horovod enables researchers and practitioners to develop and train complex deep learning models with ease and efficiency. In this context, Horovod, an open-source distributed deep learning framework, has gained significant attention for its ability to scale deep learning models across multiple GPUs and compute nodes. With the rapid growth of data and the increasing complexity of models, the need for efficient and scalable training methods has become more critical than ever. Exploring Distributed Deep Learning with Horovod and Keras: Techniques and Applicationsĭistributed deep learning has emerged as a powerful technique for training large-scale neural networks in recent years.
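
To make the ring-allreduce idea concrete, here is a minimal single-process Python simulation of it. This is not Horovod code: it only sketches the algorithm, assuming each worker holds a gradient vector of the same length and that the goal is the element-wise average across workers. The function names `chunk_bounds` and `ring_allreduce_average` are hypothetical, chosen for illustration. Each worker's vector is split into N chunks; a reduce-scatter phase passes partial sums around the ring, and an all-gather phase circulates the finished chunks so every worker ends up with the same result.

```python
from typing import List


def chunk_bounds(length: int, n: int) -> List[range]:
    """Split indices 0..length-1 into n contiguous (possibly empty) chunks."""
    return [range(c * length // n, (c + 1) * length // n) for c in range(n)]


def ring_allreduce_average(grads: List[List[float]]) -> List[List[float]]:
    """Simulate ring-allreduce over n workers (hypothetical helper, not Horovod API).

    grads[i] is the local gradient vector of worker i. Returns each worker's
    buffer after the allreduce, i.e. the element-wise average of all vectors.
    """
    if not grads:
        raise ValueError("need at least one worker")
    n = len(grads)
    length = len(grads[0])
    if any(len(g) != length for g in grads):
        raise ValueError("all workers must hold vectors of the same length")
    buf = [list(g) for g in grads]  # each worker's working buffer
    chunks = chunk_bounds(length, n)

    # Phase 1: reduce-scatter. In step s, worker i sends chunk (i - s) % n to
    # its right neighbour, which adds it to its own copy. After n - 1 steps,
    # worker i holds the complete sum of chunk (i + 1) % n.
    for step in range(n - 1):
        # Snapshot all outgoing messages first so the sends are "simultaneous".
        msgs = []
        for i in range(n):
            c = (i - step) % n
            msgs.append(((i + 1) % n, c, [buf[i][k] for k in chunks[c]]))
        for dst, c, values in msgs:
            for k, v in zip(chunks[c], values):
                buf[dst][k] += v

    # Phase 2: all-gather. In step s, worker i forwards chunk (i + 1 - s) % n,
    # which is already fully reduced; the receiver overwrites its stale copy.
    for step in range(n - 1):
        msgs = []
        for i in range(n):
            c = (i + 1 - step) % n
            msgs.append(((i + 1) % n, c, [buf[i][k] for k in chunks[c]]))
        for dst, c, values in msgs:
            for k, v in zip(chunks[c], values):
                buf[dst][k] = v

    # Divide the summed gradients by the number of workers to average them.
    return [[v / n for v in b] for b in buf]


worker_grads = [[1.0, 2.0, 3.0, 4.0], [5.0, 6.0, 7.0, 8.0], [9.0, 10.0, 11.0, 12.0]]
print(ring_allreduce_average(worker_grads)[0])  # → [5.0, 6.0, 7.0, 8.0]
```

The design choice behind this pattern is bandwidth: each worker sends 2(N−1) chunks of roughly L/N elements, so its total traffic is about 2(N−1)/N · L, which stays nearly constant as N grows. That property is a large part of why ring-allreduce scales well across many GPUs.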
